Comparing AI API Gateways

AI Gateways are rapidly becoming essential tools for businesses that use artificial intelligence and large language models (LLMs). Traditional API Gateways have evolved to meet the demands of modern AI workloads, adding features tailored to AI/ML applications: advanced model routing, latency management, and comprehensive analytics to optimize performance. AI API Gateways also integrate and manage AI frameworks to improve application performance and governance, keeping things simple for developers who are migrating existing AI applications.

The leading players in the AI API Gateway space each bring unique capabilities to address the demands of AI/ML applications. Moesif’s analytics platform complements these gateways by giving businesses a way to track costs, monitor tenant-specific metrics, and optimize LLM app performance. This combination provides the insights needed to scale AI-powered solutions effectively without depending on any single gateway.

What is an AI API Gateway?

AI API Gateways extend the capabilities of traditional API Gateways to address the unique demands of AI workloads. While standard gateways focus on routing and securing API traffic, AI Gateways can offer advanced features like model-specific routing, data governance, latency optimization, and real-time observability. These tools enhance the performance of AI infrastructure by facilitating automated monitoring of machine learning (ML) pipelines, data, and models, ensuring smooth operation and reliability. They ensure seamless integration with AI/ML services while maintaining robust security and compliance.

Key Features of AI API Gateways

- Model Routing: AI workloads often involve multiple models that specialize in different tasks, such as text generation, image recognition, or natural language processing. AI API Gateways intelligently route incoming requests to the most appropriate model based on the type of workload, ensuring efficient processing and optimal utilization of computational resources. This routing is often dynamic, taking into account factors such as model performance, availability, and workload priority.

- Latency Management: Delivering low-latency responses is crucial for real-time AI applications, such as chatbots and recommendation engines. AI Gateways leverage sophisticated techniques like traffic shaping, caching, and load balancing to reduce response times. By ensuring minimal delays, they enhance user experience and support the performance requirements of latency-sensitive applications.

- Data Governance: Handling sensitive data, such as user information or proprietary datasets, requires stringent governance policies. AI API Gateways provide tools to manage data access securely, enforce compliance with regulations like GDPR or HIPAA, and maintain audit trails. These features are critical for businesses operating in highly regulated industries, ensuring that their AI applications meet legal and ethical standards.

- Observability: Effective observability is key to maintaining and improving the performance of AI services. AI observability provides comprehensive insights into machine learning model behavior, data quality, and performance throughout their lifecycle. AI Gateways offer observability solutions that facilitate root cause analysis, enabling businesses to proactively monitor and address issues within ML models. By leveraging these insights, businesses can optimize performance, ensure model reliability, and enhance the overall effectiveness of their AI applications.
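To make the model routing idea above more concrete, here is a minimal Python sketch. It is an illustration only, not how any particular gateway implements routing: the `ModelBackend` type, the model names, and the latency figures are all hypothetical. It picks the healthy backend for a task with the lowest rolling average latency, which covers two of the factors mentioned above (performance and availability).

```python
from dataclasses import dataclass


@dataclass
class ModelBackend:
    """A hypothetical model endpoint registered with the gateway."""
    name: str
    task: str              # e.g. "text-generation" or "image-recognition"
    avg_latency_ms: float  # rolling average the gateway would maintain
    healthy: bool = True


def route(task: str, backends: list[ModelBackend]) -> ModelBackend:
    """Pick the healthy backend for the task with the lowest average latency."""
    candidates = [b for b in backends if b.task == task and b.healthy]
    if not candidates:
        raise LookupError(f"no healthy backend for task {task!r}")
    return min(candidates, key=lambda b: b.avg_latency_ms)


# Illustrative registry; names and numbers are made up.
backends = [
    ModelBackend("text-model-a", "text-generation", avg_latency_ms=420.0),
    ModelBackend("text-model-b", "text-generation", avg_latency_ms=310.0),
    ModelBackend("vision-model-a", "image-recognition", avg_latency_ms=180.0),
]

chosen = route("text-generation", backends)
print(chosen.name)
```

A production gateway would likely also weigh cost, quota, and workload priority, but the shape of the decision is the same: filter to eligible backends, then rank them.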

The Role of API Gateways in API Management

API gateways play a pivotal role in API management by serving as the single entry point for clients to access backend services. They provide a crucial layer of abstraction between the client and the backend services, enhancing security, scalability, and performance. By handling tasks such as authentication, rate limiting, caching, and routing, API gateways simplify the management and monitoring of API traffic. This centralized platform allows for the enforcement of security policies, implementation of traffic management rules, and seamless integration with backend services. In essence, API gateways streamline the complexities of API management, ensuring efficient and secure interactions between clients and backend services.
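Rate limiting is one of the tasks listed above that is easy to picture in code. The sketch below uses a token bucket, a common technique for this, though the paragraph above does not say which algorithm any given gateway uses. The class name and parameters are hypothetical, and the injectable `clock` argument exists only to make the behavior easy to test.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` per second."""

    def __init__(self, capacity: int, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        """Return True if the request may proceed, consuming one token."""
        now = self.clock()
        # Refill in proportion to elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# One bucket per API key is a typical arrangement (an assumption here).
bucket = TokenBucket(capacity=2, rate=1.0)
print([bucket.allow() for _ in range(3)])
```

With a capacity of two, a burst of three back-to-back calls should admit the first two and reject the third until the bucket refills.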

Benefits of Using an API Gateway

Utilizing an API gateway offers numerous benefits, including enhanced security, scalability, and performance. API gateways act as a protective shield for backend services, providing robust security and authentication mechanisms to guard against external threats. They also improve scalability by efficiently managing high volumes of API traffic through load balancing and caching capabilities. Additionally, API gateways offer real-time analytics and performance metrics, enabling organizations to monitor and optimize API performance effectively. By leveraging these features, businesses can ensure their backend services remain secure, scalable, and performant, ultimately delivering a superior user experience.

API Gateway Architecture

An API gateway typically comprises several key components, including a reverse proxy, a routing engine, and a security module. The reverse proxy serves as the entry point for API requests, directing them to the appropriate backend service. The routing engine ensures that requests are efficiently routed based on predefined rules, optimizing the flow of API traffic. The security module provides essential features such as authentication, rate limiting, and encryption to safeguard backend services. API gateways can be deployed on-premises, in the cloud, or in a hybrid environment, offering flexibility to meet diverse API management needs. This architecture ensures that API gateways can effectively manage and secure API interactions across various deployment scenarios.
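The three components described above can be sketched as a small pipeline in Python. This is a toy model under stated assumptions, not a real gateway: requests and responses are plain dictionaries, and the backend paths and the `demo-key` credential are placeholders invented for the example.

```python
from typing import Callable

Request = dict
Response = dict
Handler = Callable[[Request], Response]

# Hypothetical backends keyed by path prefix.
BACKENDS: dict[str, Handler] = {
    "/v1/chat": lambda req: {"status": 200, "body": "chat backend"},
    "/v1/embeddings": lambda req: {"status": 200, "body": "embeddings backend"},
}
API_KEYS = {"demo-key"}  # placeholder credential store


def routing_engine(request: Request) -> Response:
    """Forward the request to the backend whose prefix matches the path."""
    for prefix, backend in BACKENDS.items():
        if request["path"].startswith(prefix):
            return backend(request)
    return {"status": 404, "body": "no route"}


def security_module(next_handler: Handler) -> Handler:
    """Wrap a handler so unauthenticated requests never reach it."""
    def guarded(request: Request) -> Response:
        if request.get("api_key") not in API_KEYS:
            return {"status": 401, "body": "unauthorized"}
        return next_handler(request)
    return guarded


# The reverse proxy entry point: security first, then routing.
gateway = security_module(routing_engine)

print(gateway({"path": "/v1/chat/completions", "api_key": "demo-key"}))
print(gateway({"path": "/v1/chat/completions", "api_key": "wrong"}))
```

Composing the security module around the routing engine means a rejected request is never forwarded, which is the abstraction layer the architecture is meant to provide.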

Key Players in the AI API Gateway Space

WSO2 AI Gateway

WSO2 helps you build, scale, and manage AI APIs using AI Gateway in both the open-source API Manager (APIM) and the SaaS API management platform Bijira. It puts a strong emphasis on governance and guardrails for safe, compliant, and transparent AI use, while keeping business and customer objectives aligned.

Key features include:

- Secure creation of AI APIs with leading vendors like OpenAI, Azure OpenAI, Anthropic Claude, Amazon Bedrock, and Mistral, including custom AI vendors

- Dynamic routing of AI API requests across multiple models within a vendor

- Built-in and external guardrails, including Azure Content Safety and Amazon Bedrock Guardrails

- Built-in observability and governance to manage traffic, track usage, and optimize performance

With these features, WSO2 AI Gateway enables organizations of all sizes to drive superior outcomes with their AI-powered applications.

Kong

Kong’s AI Gateway focuses on extensibility and integration, making it ideal for businesses deploying AI/ML applications. Built on Kong’s core platform, the AI Gateway is designed to securely manage and route traffic between applications and AI services. It includes features such as centralized governance, advanced observability, and native integrations with AI/ML tools. Its robust plugin ecosystem and API lifecycle management tools simplify the integration of AI models into existing workflows, enabling businesses to scale AI adoption seamlessly.

Solo.io

Solo.io emphasizes flexibility and performance, offering real-time model orchestration designed for modern AI/ML environments. The Gloo AI Gateway leverages Solo.io’s Envoy-based architecture to deliver advanced traffic control, fine-grained security, and seamless integration with AI/ML frameworks. This architecture supports large-scale AI workloads with features like intelligent request routing, policy enforcement, and low-latency data processing, ensuring that critical applications perform reliably under demanding conditions.

Cloudflare

Cloudflare combines its extensive network capabilities with AI Gateway features to optimize performance, scalability, and security for AI applications. The Cloudflare AI Gateway offers seamless integration with AI models and tools while providing powerful features like API security, rate limiting, and advanced traffic management. Its ability to handle large-scale AI interactions ensures that businesses can scale their operations efficiently, while features like DDoS protection and data privacy controls make it a reliable choice for enterprises prioritizing security.

AWS

AWS integrates its AI gateway capabilities into its cloud ecosystem, offering tools for cost control, scalability, and advanced security. With Amazon Bedrock, AWS offers a fully managed service for building and scaling generative AI applications, allowing businesses to access foundation models via simple API calls without managing infrastructure. This approach allows for streamlined integration, cost-effective scaling, and flexible deployment options. Businesses can leverage AWS’s vast suite of cloud services to efficiently manage their AI workloads while benefiting from advanced monitoring and model customization capabilities.

Azure

Microsoft Azure connects its AI Gateway capabilities to its AI and ML ecosystem, providing enterprise-grade observability, governance, and seamless model integration. With the GenAI Gateway capabilities in Azure API Management, businesses can now simplify interactions with generative AI services while ensuring scalability and security. This includes features such as automated API generation for AI models, secure key management, and enhanced monitoring tools. Azure’s strong focus on compliance and data privacy, coupled with its deep integration into the Microsoft ecosystem, makes it a preferred choice for large organizations and enterprises managing sophisticated AI workflows.

Comparison Table

| Gateway | Strengths | Ideal For |
|---|---|---|
| WSO2 AI Gateway | Intelligent routing, MCP support, centralized management, governance, and observability with full lifecycle API management | Projects and businesses of any scale looking to adopt and scale GenAI services |
| Kong | Centralized management through cloud control plane, advanced observability, plugin ecosystem | Extensible AI/ML integrations |
| Solo.io | Intelligent routing, fine-grained security, low-latency processing | Large-scale, latency-sensitive workloads |
| Cloudflare | Scalability, API security, advanced traffic management, DDoS protection | Enterprises with high traffic demands |
| AWS | Simplified API integration with foundation models, flexible scaling, cost-effective | Cloud-native AI and generative AI deployments |
| Azure | Automated API generation, secure key management, compliance focus | Enterprises scaling generative AI apps |

Security and Compliance

API gateways are equipped with a range of security features designed to protect backend services. These include authentication mechanisms to verify user identities, rate limiting to control the flow of API traffic, and encryption to secure data in transit. Additionally, API gateways help organizations comply with industry standards and regulations, such as PCI-DSS and HIPAA, by providing tools to enforce security policies and maintain audit trails. They also offer real-time monitoring and analytics to detect and respond to security threats promptly. By leveraging these capabilities, businesses can ensure their backend services are secure and compliant with regulatory requirements.
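As a rough illustration of the audit trail idea above, the Python sketch below writes a JSON audit record that stores only a redacted copy of the prompt. The field names are hypothetical, and the email pattern is deliberately simple; it is not a complete PII detector and would not satisfy any regulation on its own.

```python
import json
import re
from datetime import datetime, timezone

# A simple pattern for email-like strings (illustrative, not exhaustive).
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact(text: str) -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL.sub("[REDACTED_EMAIL]", text)


def audit_record(user_id: str, path: str, prompt: str) -> str:
    """Build a JSON audit line that stores only the redacted prompt."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "path": path,
        "prompt": redact(prompt),
    })


print(audit_record("user-42", "/v1/chat", "Email alice@example.com the report"))
```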

Scalability and Performance

API gateways are engineered to handle high volumes of API traffic, providing the scalability and performance needed for modern applications. They offer load balancing and caching capabilities to improve response times and reduce the load on backend services. Real-time analytics and performance metrics enable organizations to monitor and optimize API performance continuously. Additionally, API gateways support multiple protocols, including HTTP, HTTPS, and WebSocket, making them a versatile solution for API management. By ensuring efficient traffic management and performance optimization, API gateways help businesses deliver reliable and responsive services to their users.
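The caching mentioned above can be sketched as a small time-to-live (TTL) cache placed in front of a backend call. This is a minimal sketch, assuming responses are cached by exact prompt text; real gateways may key on more than the prompt or use semantic matching. The `TTLCache` class and `cached_call` helper are hypothetical names.

```python
import time
from typing import Callable, Optional


class TTLCache:
    """Cache string responses for `ttl` seconds, keyed by an arbitrary string."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self._store: dict[str, tuple[float, str]] = {}

    def get(self, key: str) -> Optional[str]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # expired: evict and report a miss
            return None
        return value

    def put(self, key: str, value: str) -> None:
        self._store[key] = (self.clock() + self.ttl, value)


def cached_call(cache: TTLCache, prompt: str, backend: Callable[[str], str]) -> str:
    """Return a cached response when available; otherwise call the backend."""
    hit = cache.get(prompt)
    if hit is not None:
        return hit
    response = backend(prompt)
    cache.put(prompt, response)
    return response
```

Each cache hit is one fewer request the backend has to serve, which is how caching reduces both response time and backend load.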

The Importance of Analytics for AI/LLM Apps

Deploying AI and LLM applications demands a focus on monitoring and optimizing performance. These workloads often incur significant computational costs and resource consumption, so detailed insights into AI infrastructure operations are critical for maintaining efficiency and scalability. Granular analytics help organizations manage costs, streamline resource allocation, and maintain operational excellence, all while ensuring AI solutions meet the growing demands of modern applications. Some key challenges addressed by analytics include:

- Cost Attribution: Managing the costs of AI workloads can be challenging, especially when multiple models and tenants are involved. Advanced analytics enable businesses to allocate costs accurately by associating them with specific models or customers. This level of granularity helps organizations identify the most resource-intensive models, evaluate their return on investment, and make informed decisions about resource allocation and cost optimization.

- Performance Optimization: AI applications, especially those with real-time requirements, demand peak performance to meet user expectations. Analytics platforms provide deep insights into performance metrics such as latency, throughput, and error rates. By identifying inefficiencies and bottlenecks, businesses can fine-tune their infrastructure, improve response times, and ensure a seamless user experience even during peak usage.

- Real-Time Monitoring: The dynamic nature of AI workloads necessitates constant monitoring to ensure stability and reliability. Real-time analytics tools detect anomalies such as unexpected spikes in latency or errors, enabling teams to address potential issues before they escalate. This proactive approach minimizes downtime, enhances system reliability, and ensures consistent performance for end-users.
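To illustrate the kind of anomaly detection described in the list above, here is a minimal Python sketch that flags a latency sample sitting well above a rolling window of recent samples. The window size, the threshold of three standard deviations, and the `LatencyMonitor` name are assumptions chosen for the example; analytics platforms may use quite different methods.

```python
from collections import deque
from statistics import mean, pstdev


class LatencyMonitor:
    """Flag a latency sample that sits far above the recent rolling window."""

    def __init__(self, window: int = 50, threshold: float = 3.0, min_samples: int = 10):
        self.samples = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, latency_ms: float) -> bool:
        """Record a sample; return True if it looks like an anomalous spike."""
        is_spike = False
        if len(self.samples) >= self.min_samples:
            mu = mean(self.samples)
            sigma = pstdev(self.samples)
            # Floor the deviation at 1 ms so identical samples do not
            # make every tiny increase look like a spike.
            is_spike = latency_ms > mu + self.threshold * max(sigma, 1.0)
        self.samples.append(latency_ms)
        return is_spike


monitor = LatencyMonitor()
for latency in [100, 102, 98, 101, 99, 103, 100, 97, 102, 101, 100, 950]:
    if monitor.observe(latency):
        print(f"latency spike: {latency} ms")
```

The monitor stays quiet until it has seen enough samples to form a baseline, then compares each new sample against that baseline before adding it to the window.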

Moesif tackles these challenges with advanced analytics tailored specifically to the complexities of AI workloads. By offering granular insights into key areas such as cost attribution, performance metrics, and real-time monitoring, Moesif empowers organizations to make data-driven decisions. For instance, businesses can track high-usage clients, optimize latency-sensitive operations, and proactively address system inefficiencies. These capabilities not only ensure effective management of AI investments but also foster scalability and operational excellence in dynamic environments.

Moesif’s Role in AI Gateway Analytics and API Traffic

Moesif’s analytics platform complements AI API Gateways by offering key capabilities in AI observability and integrating seamlessly with various gateway solutions like WSO2, Kong, Solo.io, Cloudflare, AWS, and Azure. By bringing all your analytics into one centralized platform, Moesif enables organizations to manage their API traffic and performance metrics across multiple gateways. This unified approach reduces complexity, enhances visibility, and ensures consistent data-driven decision-making across diverse infrastructures.

- Cost Attribution: Moesif enables organizations to track the costs associated with individual models or tenants in great detail. By offering granular insights into usage patterns, businesses can identify the specific AI models or users driving the highest costs. This level of visibility allows companies to implement targeted cost-control measures, optimize billing structures, and ensure financial sustainability for their AI operations. Additionally, these insights help align costs with value, improving overall operational efficiency.

- Performance Metrics: Moesif empowers businesses to monitor critical performance indicators, including response times, throughput, and error rates. These metrics provide a comprehensive view of how AI applications are functioning in real-time. By identifying performance bottlenecks and inefficiencies, Moesif helps organizations fine-tune their infrastructure to ensure consistent and reliable service delivery. This focus on performance optimization supports a seamless user experience and enhances customer satisfaction.

- Real-Time Alerts: Moesif’s real-time alerting capabilities allow businesses to proactively detect anomalies and inefficiencies in their AI/LLM workloads. Whether it’s a sudden spike in latency, an unexpected error rate, or unusual usage patterns, Moesif provides timely notifications that enable rapid response. By addressing issues before they escalate, organizations can minimize downtime, maintain system stability, and ensure uninterrupted service for end-users.
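The cost attribution described above boils down to a grouped sum over logged API events. The Python sketch below shows that arithmetic in isolation. It is not Moesif's API: the event fields, the model names, and the per-token prices are all hypothetical and exist only to make the calculation concrete.

```python
from collections import defaultdict

# Hypothetical per-1,000-token prices in USD; real prices vary by provider.
PRICE_PER_1K = {
    "model-small": {"input": 0.0005, "output": 0.0015},
    "model-large": {"input": 0.0100, "output": 0.0300},
}


def attribute_costs(events: list[dict]) -> dict[tuple[str, str], float]:
    """Sum estimated cost per (tenant, model) pair from logged token counts."""
    totals: dict[tuple[str, str], float] = defaultdict(float)
    for e in events:
        price = PRICE_PER_1K[e["model"]]
        cost = (e["input_tokens"] * price["input"]
                + e["output_tokens"] * price["output"]) / 1000
        totals[(e["tenant"], e["model"])] += cost
    return dict(totals)


# Illustrative events; tenants and counts are made up.
events = [
    {"tenant": "acme", "model": "model-large", "input_tokens": 1000, "output_tokens": 500},
    {"tenant": "acme", "model": "model-small", "input_tokens": 2000, "output_tokens": 1000},
    {"tenant": "globex", "model": "model-large", "input_tokens": 500, "output_tokens": 500},
]

for (tenant, model), cost in sorted(attribute_costs(events).items()):
    print(f"{tenant:8s} {model:12s} ${cost:.4f}")
```

Grouping by both tenant and model makes it possible to answer either question, which customers cost the most or which models cost the most, from the same totals.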

By integrating Moesif with gateways like WSO2, Kong, Solo.io, Cloudflare, AWS, and Azure, businesses can gain unparalleled visibility into their AI applications. Moesif’s detailed analytics enable organizations to track API usage trends, monitor performance bottlenecks, and attribute costs to specific models or tenants. This integration fosters smarter decision-making by providing actionable insights that drive operational efficiency, reduce costs, and enhance user experiences. Through this synergy, businesses can optimize their AI deployments while ensuring scalability and sustainability.

Use Case: Analytics for LLM Apps and Model Performance

Consider a business deploying LLM-based applications. These applications often handle high volumes of API traffic, requiring real-time monitoring and precise cost management to remain efficient and scalable. Moesif’s analytics platform supports this by providing insights into key performance indicators, usage patterns, and operational inefficiencies. Importantly, Moesif supports setups that utilize multiple API gateways, allowing businesses to centralize their analytics across WSO2, Kong, Solo.io, Cloudflare, and other gateway solutions. By incorporating Moesif, businesses gain the ability to streamline workflows, reduce costs, and deliver superior performance to end-users.

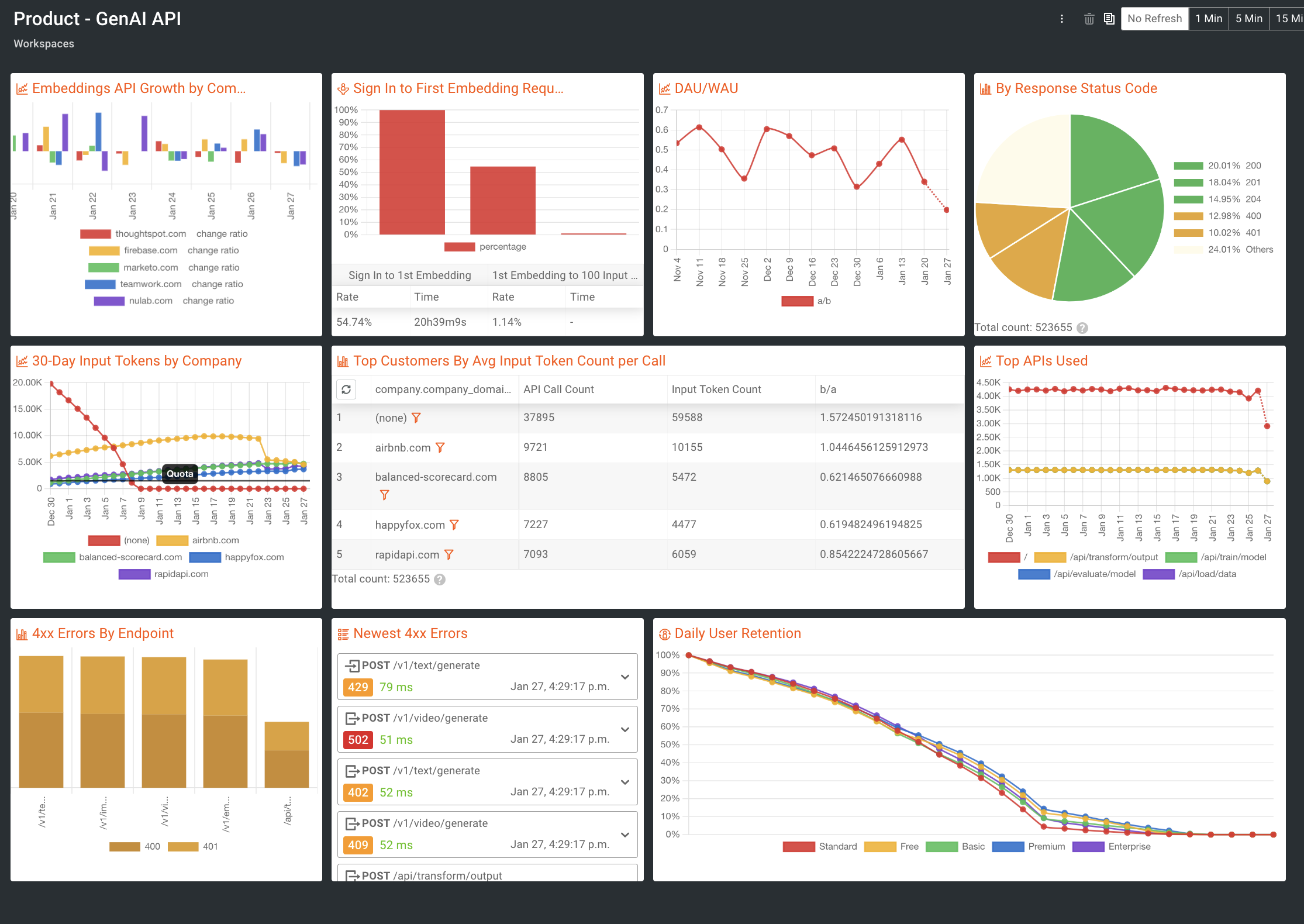

- Monitor Costs: Moesif provides detailed insights into cost attribution by breaking down expenses for specific models or customers. For instance, businesses using a Gen AI API can leverage Moesif to analyze input token usage for each customer and could create a dashboard like “Top Customers By Avg Input Token Count per Call”. This visibility allows companies to track high-usage clients and assess their contribution to overall operational costs. By identifying such patterns, businesses can implement usage-based pricing strategies or optimize resources allocated to high-demand clients, ensuring accurate billing and cost efficiency.

- Optimize Resources: Moesif’s analytics enable organizations to identify resource-intensive patterns and take proactive steps to optimize them. For example, a “30-Day Input Tokens by Company” chart could highlight usage trends across customers, with certain accounts nearing quota limits. This information helps teams predict potential resource bottlenecks and allocate server capacity or computational power more effectively. By acting on these insights, businesses can improve service reliability and reduce latency for their top-performing APIs.

- Improve Performance: Moesif helps businesses enhance their API performance by monitoring latency issues and error rates in real time. For instance, creating a “4xx Errors by Endpoint” or “Newest 4xx Errors” chart can provide granular error tracking, enabling teams to quickly address issues. Coupled with charts such as “Top APIs Used” and “Daily User Retention”, these views help teams prioritize fixes and ensure a smooth experience for users.
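For readers curious about what sits behind a chart like “Top Customers By Avg Input Token Count per Call”, the aggregation can be sketched in a few lines of Python. This is only an illustration of the calculation, assuming each logged call carries a customer identifier and an input token count; the field names and sample data are hypothetical, and in practice the dashboard computes this for you.

```python
from collections import defaultdict


def top_customers_by_avg_input_tokens(calls: list[dict], limit: int = 3) -> list[tuple[str, float]]:
    """Rank customers by mean input tokens per call, highest first."""
    totals = defaultdict(lambda: [0, 0])  # customer -> [token_sum, call_count]
    for call in calls:
        entry = totals[call["customer"]]
        entry[0] += call["input_tokens"]
        entry[1] += 1
    averages = [(customer, tokens / count) for customer, (tokens, count) in totals.items()]
    return sorted(averages, key=lambda pair: pair[1], reverse=True)[:limit]


# Illustrative call log; customers and counts are made up.
calls = [
    {"customer": "acme", "input_tokens": 1200},
    {"customer": "acme", "input_tokens": 800},
    {"customer": "globex", "input_tokens": 300},
    {"customer": "initech", "input_tokens": 1500},
]
print(top_customers_by_avg_input_tokens(calls))
```

Ranking by average rather than total separates customers who send large prompts from customers who simply make many calls, which matters when deciding between per-call and per-token pricing.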

Moesif’s advanced analytics make scaling AI apps more predictable and cost-efficient by providing deep insights into user behavior, performance metrics, and cost attribution. For example, businesses deploying LLM applications can leverage Moesif to monitor API usage trends, identify high-cost models or customers, and optimize server capacity. This empowers organizations to fine-tune their operations, reduce unnecessary expenses, and enhance overall system performance, enabling them to grow their AI capabilities with confidence.

Conclusion

AI Gateways are revolutionizing the way organizations manage and scale AI/LLM applications. While WSO2, Kong, Solo.io, Cloudflare, AWS, and Azure provide robust solutions for routing and managing AI traffic, Moesif adds unparalleled value through its advanced analytics platform. By leveraging Moesif, businesses gain critical insights into cost management, performance optimization, and user behavior, making it an essential tool for scaling AI-powered solutions.

Sign up for Moesif’s analytics platform today and unlock the full potential of your AI applications. No credit card required.